Tangible Web Layout Design for Blind and Visually Impaired People

Using a Tangible User Interface (TUI) for accessible web design

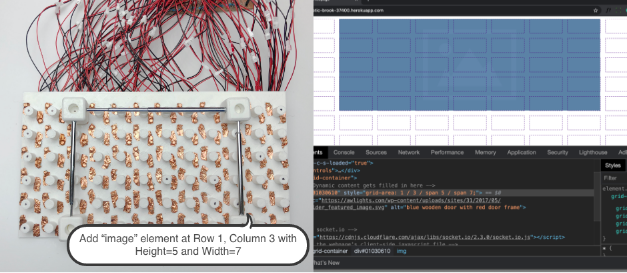

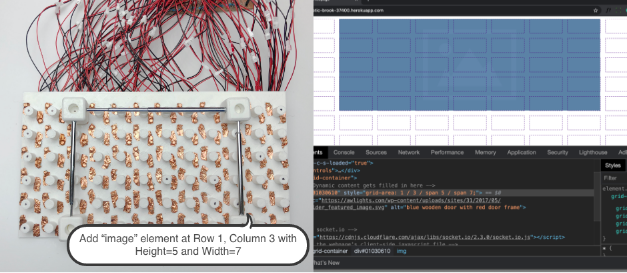

Hardware prototype of the TUI tool along with the generated output

Paper published, UIST Conference '22 (26.3% acceptance rate)

Paper published, UIST Conference '20 (58.9% acceptance rate)

Honorable Mention: Jenny Preece and Ben Shneiderman Award for Excellence, University of Maryland, 2020

Project details

This was independent research conducted for my MS-HCI thesis. After I graduated, my thesis advisor continued the project with other students.

Abstract

Blind and visually impaired (BVI) people typically consume web content by using screen readers. However, there is a lack of accessible tools that allow them to create the visual layout of a website without assistance. Recent work in this space enables blind people to edit existing visual layout templates, but there is no way for them to create a layout from scratch. This research project aims to implement a 3D-printed tangible user interface (TUI) tool, named Sparsha, for website layout creation that blind users can use without assistance.

I conducted a semi-structured interview followed by a co-design session with a blind graduate student who is also an accessibility researcher. Based on the elicited insights and designs, I implemented the final system using 3D-printed tactile elements, an Arduino-powered sensing circuit, and a web server that renders the final HTML layout, provides feedback, and can be used to populate content.

The Problem

The ongoing democratization of assistive technologies is improving the quality of life for millions of people who identify as blind or visually impaired (BVI). Devices like screen readers, tactile printers, and braille displays have also led to new employment opportunities for BVI people. Recent accessible programming tools make it possible for BVI developers to write code to express logic and algorithms, and as a result the number of blind programmers has been rising steadily in the last few years (based on StackOverflow's Annual Developer Survey). Although BVI developers are now able to write code, they still lack accessible ways to define visual layouts, for instance the layout of an HTML webpage. Lacking direct feedback about the size and position of elements, BVI developers can write code to specify what content they create, but cannot tell where the elements end up or how they look.

Approach

Ability-based design (Wobbrock et al.) is an approach that advocates shifting the focus of accessible design from disability to ability. Designers should strive to leverage all that their target audience can do. Blind users use their sense of touch to "navigate and negotiate the world" in their daily life. Previous studies have revealed that blind people have higher tactile acuity than sighted people (Cattaneo et al.). Based on this perspective, I see value in a tool that can support this tactile perceptual ability by using a Tangible User Interface (TUI).

The Process

The design process

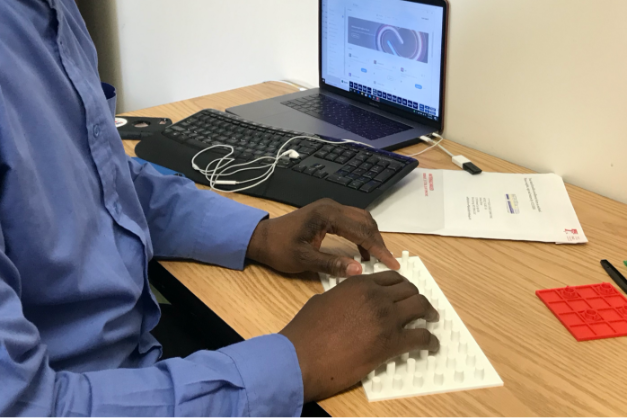

Employing a user-centered research method, I started with a semi-structured interview with a blind participant to understand the challenges BVI people face, as well as any potential solutions they had explored. Based on the interview, I prepared a set of low-fidelity physical probes and artifacts that could potentially help BVI developers create layouts. Following the Participatory Design approach, I then used these physical probes in a co-design session with the blind participant. The behavioral evidence collected from the co-design session, together with the interview findings, guided the design of the initial prototype of Sparsha.

Co-design session with the blind participant.

Sparsha - The TUI Web Design tool

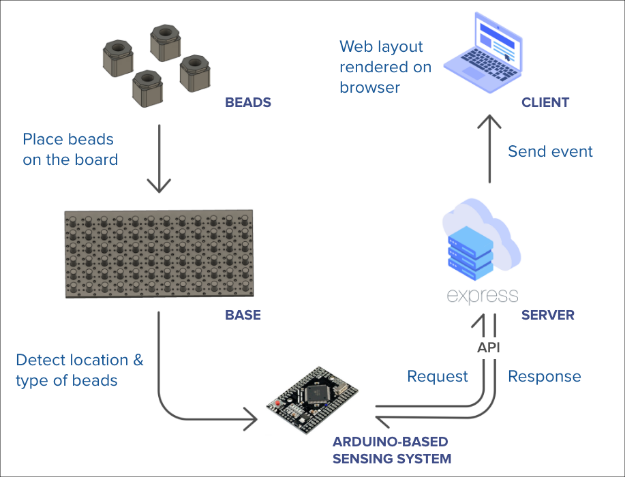

System diagram for the proposed solution.

When a user places four beads of one type on the board (in the shape of a rectangle, due to the edge constraints), they snap onto the board through magnetic contact points. An Arduino-based sensing circuit attached to the base identifies the type and location of the beads. It then sends this information over WiFi to the web server, which in turn sends an event to the client browser to render an HTML element of the specified type at the specified location. The web application also gives audio feedback to confirm that the correct HTML element was placed at the correct location.
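The server-side step described above can be illustrated with a minimal sketch. It is not the actual Sparsha implementation: the board size, the bead-type names, the bead-to-tag mapping, the use of CSS grid for positioning, and the wording of the feedback text are all assumptions made for illustration. It shows how four sensed bead positions could be validated as a rectangle, turned into an HTML element, and described in a sentence that could be read aloud.

```python
from dataclasses import dataclass

# Hypothetical board size and bead-to-tag mapping; the write-up does not specify these.
GRID_ROWS = 8
GRID_COLS = 8
BEAD_TAGS = {"heading": "h1", "paragraph": "p", "image": "img"}


@dataclass(frozen=True)
class Placement:
    bead_type: str
    top: int     # first row covered (0-based)
    left: int    # first column covered (0-based)
    bottom: int  # last row covered (inclusive)
    right: int   # last column covered (inclusive)


def placement_from_beads(bead_type: str, beads: list[tuple[int, int]]) -> Placement:
    """Turn four (row, col) bead positions into a rectangular placement."""
    if bead_type not in BEAD_TAGS:
        raise ValueError(f"unknown bead type: {bead_type}")
    if len(set(beads)) != 4:
        raise ValueError("expected four distinct bead positions")
    rows = sorted({r for r, _ in beads})
    cols = sorted({c for _, c in beads})
    # Four distinct corners of an axis-aligned rectangle share exactly
    # two rows and two columns.
    if len(rows) != 2 or len(cols) != 2:
        raise ValueError("beads do not form a rectangle")
    if rows[0] < 0 or rows[1] >= GRID_ROWS or cols[0] < 0 or cols[1] >= GRID_COLS:
        raise ValueError("bead position outside the board")
    return Placement(bead_type, rows[0], cols[0], rows[1], cols[1])


def render_html(p: Placement) -> str:
    """Render the placement as an element positioned with CSS grid lines."""
    tag = BEAD_TAGS[p.bead_type]
    # CSS grid lines are 1-based and the end line is exclusive.
    style = (f"grid-row: {p.top + 1} / {p.bottom + 2}; "
             f"grid-column: {p.left + 1} / {p.right + 2};")
    if tag == "img":
        return f'<img style="{style}" alt="">'
    return f'<{tag} style="{style}"></{tag}>'


def feedback_message(p: Placement) -> str:
    """Build the confirmation text that a speech synthesizer could read aloud."""
    return (f"{p.bead_type} placed at row {p.top + 1}, column {p.left + 1}, "
            f"spanning {p.bottom - p.top + 1} rows and {p.right - p.left + 1} columns")


if __name__ == "__main__":
    placement = placement_from_beads("heading", [(0, 0), (0, 3), (1, 0), (1, 3)])
    print(render_html(placement))
    print(feedback_message(placement))
```

Rejecting bead sets that do not share exactly two rows and two columns mirrors the board's edge constraints in software, so a misread sensor value would produce an error rather than a misplaced element.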

Published Papers

UIST Conference '20 | UIST Conference '22